Starbridge Engineering Labs: Meta-prompting for superhuman context engineering

Our founder once posed the question: how would you build the best ranking of how effective each government & school official is, both in the US and throughout the world? The ranking would be the equivalent of the advanced analytics that show a sports player's contribution to their team.

At Starbridge, we have a number of tools and data sources for aggregating information on government & school officials, from AI web agents to private data sources to analysis agents that can run prompts over other inputs.

However, the big challenge with this problem is context engineering: the context would run out after a small number of results.

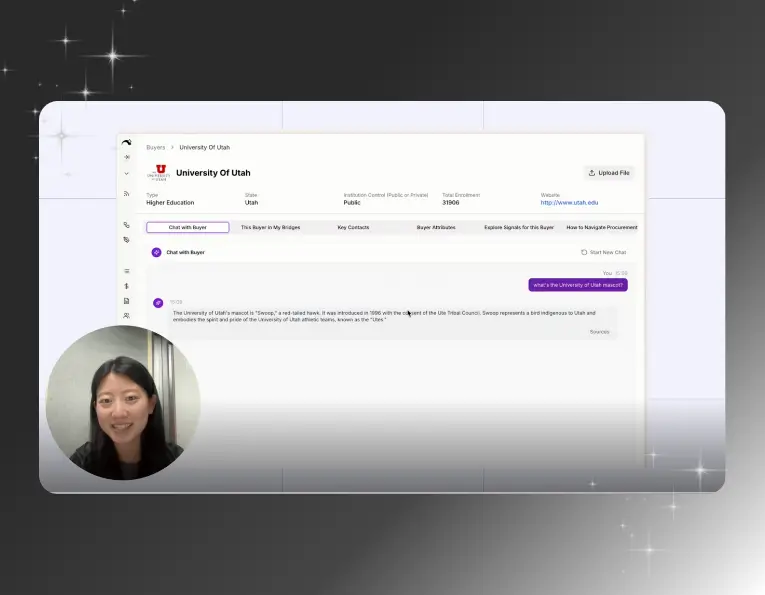

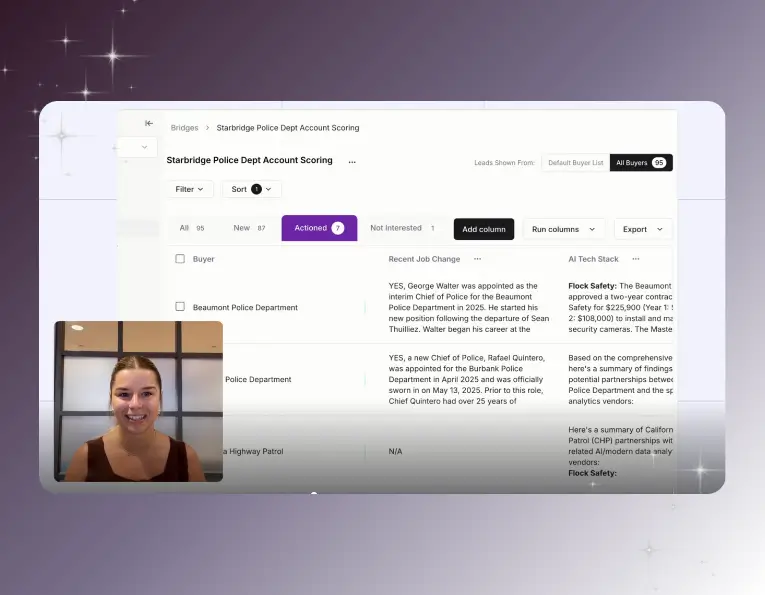

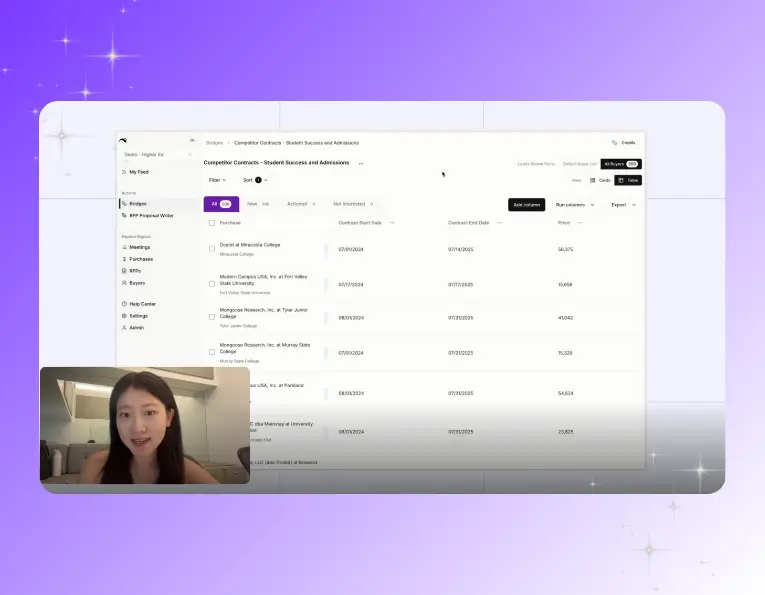

We started on the problem by building what we call Bridges. Bridges is an AI-powered spreadsheet that lets you run each of these agents as a column. The value of this is that we can break prompts down into steps and build up to a more reliable output.

For example, suppose I want to find cities that are having problems cleaning their streets, get the contact information of the person in charge of street cleaning, and write and send personalized emails to them. In Bridges, I can run just one agent at each step: first a search agent to find the list of cities, then a contact information agent for each city, then an agent that generates the email using the context from the search agent's initial response about that city's specific problem.
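
To make the column-per-agent idea concrete, here is a minimal sketch. It assumes each column is just a function from the row so far to a cell value. The names `run_columns`, `find_problem`, `find_contact`, and `draft_email` are hypothetical stand-ins, not the actual Bridges API, and the stub agents return canned strings where real agents would call search tools or a model.

```python
from typing import Callable, Dict, List, Tuple

Row = Dict[str, str]
# A column is a name plus an agent: a function from the row so far to a cell value.
Column = Tuple[str, Callable[[Row], str]]


def run_columns(rows: List[Row], columns: List[Column]) -> List[Row]:
    """Fill columns left to right so later agents can read earlier cells."""
    results = []
    for row in rows:
        filled = dict(row)  # copy so the input rows are not mutated
        for name, agent in columns:
            filled[name] = agent(filled)
        results.append(filled)
    return results


# Hypothetical stub agents; real ones would do web search or model calls.
def find_problem(row: Row) -> str:
    return f"missed street sweeping in {row['city']}"


def find_contact(row: Row) -> str:
    return f"publicworks@{row['city'].lower().replace(' ', '')}.example"


def draft_email(row: Row) -> str:
    # Reads the outputs of the two earlier columns.
    return f"To {row['contact']}: we noticed {row['problem']}."


COLUMNS: List[Column] = [
    ("problem", find_problem),
    ("contact", find_contact),
    ("email", draft_email),
]
table = run_columns([{"city": "Springfield"}], COLUMNS)
print(table[0]["email"])
```

Because each agent only sees one row and the cells already filled in, the context handed to any single step stays small no matter how many rows the sheet has.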

However, where things get interesting is planning which agents to select and how to prompt/use them…

The Need for Meta-Prompting

Now let’s imagine the user has a goal to rank every city and school official…

The challenge is not just pushing go on a bunch of agents as columns; it’s working out which agents to run, with what context, prompts, and acceptable cost.

OpenAI themselves acknowledge how difficult it is to write an effective prompt and have struggled to help users understand which of 4 or 5 models to select for a given task. Now imagine not just 4 or 5 models, but 100 or 1,000 agents and agent permutations that could be run, before even considering the prompt itself, and then the work of generating that prompt.

This is the art of meta-prompting. Its output is effective context engineering, and the magic feeling for a user of typing a crazy request and watching a series of agents and tasks carry it out, breaking each step down with superhuman precision.

Meta-Prompting: Our Approach

Let’s go back to the initial task to rank government officials:

We started by focusing on automating just one step. Could we let the user enter a natural-language prompt and, for simple tasks, reliably select the right agent and create the right prompt and budget?

The meta-prompting engine leverages 3 main sources of information:

- Information about the user’s company/history

- Context on the various attributes we have on the bridge

- The meta-prompt from the user, e.g. "find me how this mayor's city has had economic growth or decline since the start of their tenure."

The metaprompter selects which agent to use and which options to set within that agent, and then writes the ideal prompt.
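
As an illustration of the shape of that decision, the sketch below turns the three inputs into a single plan: an agent, its options, a prompt, and a budget. Everything here is an assumption made for the example. `AgentSpec`, `Plan`, and `plan_step` are hypothetical names, and the keyword-overlap selector is a toy stand-in for whatever model-driven selection the real metaprompter uses.

```python
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass
class AgentSpec:
    name: str
    keywords: Set[str]           # toy routing signal (assumption)
    default_options: Dict[str, str]
    cost_cents_per_row: int      # integer cents avoid float rounding


@dataclass
class Plan:
    agent: str
    options: Dict[str, str]
    prompt: str
    max_cost_cents: int


def plan_step(meta_prompt: str, company_context: str, attributes: List[str],
              agents: List[AgentSpec], n_rows: int) -> Plan:
    """Pick one agent and assemble its prompt and budget from the three inputs."""
    words = set(meta_prompt.lower().split())
    # Toy selector: the agent whose keywords overlap the request the most.
    best = max(agents, key=lambda a: len(a.keywords & words))
    prompt = (
        f"Context: {company_context}\n"
        f"Available columns: {', '.join(attributes)}\n"
        f"Task: {meta_prompt}"
    )
    return Plan(best.name, dict(best.default_options), prompt,
                best.cost_cents_per_row * n_rows)


AGENTS = [
    AgentSpec("contact_lookup", {"email", "phone", "contact"}, {}, 1),
    AgentSpec("web_search", {"find", "economic", "growth"}, {"depth": "deep"}, 2),
]
plan = plan_step(
    "find me how this mayor's city has had economic growth or decline "
    "since the start of their tenure",
    company_context="We sell street-cleaning services to cities.",
    attributes=["city", "mayor", "tenure_start"],
    agents=AGENTS,
    n_rows=100,
)
print(plan.agent, plan.options, plan.max_cost_cents)
```

Returning a structured plan rather than a bare prompt string keeps the agent choice, options, and cost ceiling inspectable before anything runs.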

Challenge #1: Understanding the intent

The user’s meta-prompt lacks a lot of information, so we need to derive the implicit intent from it and turn that into a more usable request. This is solved with typical prompt expansion techniques.
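
One common form of prompt expansion is to ask a model to restate the request with its implicit details filled into explicit fields. The sketch below assumes that approach. The field names, the template wording, and the `expand_intent` helper are hypothetical, and the model is passed in as a plain callable so the example runs with a canned fake instead of a real API.

```python
import json
from typing import Callable, Dict, List

REQUIRED_FIELDS = {"entity", "metric", "timeframe", "output_format"}

EXPANSION_TEMPLATE = (
    "Rewrite the user's request so that every implicit detail is explicit.\n"
    "Return JSON with the keys: entity, metric, timeframe, output_format.\n"
    "Row attributes available: {attributes}\n"
    "User request: {request}"
)


def expand_intent(request: str, attributes: List[str],
                  llm: Callable[[str], str]) -> Dict[str, str]:
    """Ask the model for an explicit version of the request and validate it."""
    raw = llm(EXPANSION_TEMPLATE.format(
        attributes=", ".join(attributes), request=request))
    expanded = json.loads(raw)
    missing = REQUIRED_FIELDS - set(expanded)
    if missing:
        raise ValueError(f"expansion is missing fields: {sorted(missing)}")
    return expanded


def fake_llm(prompt: str) -> str:
    # Canned response standing in for a real model call (assumption).
    return json.dumps({
        "entity": "the city governed by the mayor in this row",
        "metric": "economic growth or decline",
        "timeframe": "since the start of the mayor's tenure",
        "output_format": "one-sentence summary with a trend label",
    })


expanded = expand_intent(
    "find me how this mayor's city has had economic growth or decline",
    ["city", "mayor", "tenure_start"],
    fake_llm,
)
print(expanded["timeframe"])
```

Validating the returned fields before using them means a vague or malformed expansion fails loudly here rather than producing a weak prompt downstream.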

Challenge #2: Identifying relevant attributes for our prompt

Models don’t have the token budget for us to add all of our information to the prompt. We need to apply some context engineering principles here to provide the most relevant information and improve the downstream model’s performance. We applied filtering & ranking steps to determine the optimal context for the prompt.
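
A filter-then-rank step under a token budget can be sketched as follows. This is a simplified illustration, not the production logic: relevance is plain keyword overlap, token cost is approximated by whitespace word count, and `select_context` is a hypothetical helper name.

```python
from typing import Dict, List


def select_context(request: str, attributes: Dict[str, str],
                   token_budget: int) -> List[str]:
    """Return attribute names to include, most relevant first, within budget."""
    query = set(request.lower().split())
    scored = []
    for name, description in attributes.items():
        text = f"{name}: {description}"
        overlap = len(query & set(text.lower().replace(":", " ").split()))
        if overlap:  # filter: drop attributes with no overlap at all
            scored.append((overlap, name, text))
    # Rank: highest overlap first, name as a deterministic tie-breaker.
    scored.sort(key=lambda item: (-item[0], item[1]))

    chosen, used = [], 0
    for _, name, text in scored:
        cost = len(text.split())  # crude token estimate (assumption)
        if used + cost <= token_budget:
            chosen.append(name)
            used += cost
    return chosen


ATTRIBUTES = {
    "population": "city population by year",
    "gdp": "city economic output and growth by year",
    "mascot": "high school mascot name",
}
REQUEST = "economic growth of the city since the mayor took office"
print(select_context(REQUEST, ATTRIBUTES, token_budget=10))
print(select_context(REQUEST, ATTRIBUTES, token_budget=20))
```

With a budget of 10 only the most relevant attribute fits. Raising the budget to 20 admits the next-ranked one, and the irrelevant attribute is filtered out either way.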

Challenge #3: Crafting the actual Prompt

Generally, we’ve tried to incorporate the best prompting strategies from various sources and turn them into an agentic flow.

We ensure that:

- The prompt has a good structure (clear delineation between task / tools / instructions / examples)

- The phrasing is declarative where possible

- The input / output structure is well defined
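
The checklist above can be read as a small prompt builder. The sketch below is one possible rendering under stated assumptions: the section titles, the `## ` delimiters, and the `build_prompt` helper are illustrative choices, not the format the metaprompter actually emits.

```python
from typing import List, Tuple


def build_prompt(task: str, tools: List[str], instructions: List[str],
                 examples: List[Tuple[str, str]], output_schema: str) -> str:
    """Assemble a prompt with clearly delineated sections, skipping empty ones."""
    sections = [
        ("TASK", task),
        ("TOOLS", "\n".join(f"- {tool}" for tool in tools)),
        ("INSTRUCTIONS", "\n".join(f"- {line}" for line in instructions)),
        ("EXAMPLES", "\n\n".join(
            f"Input: {given}\nOutput: {expected}" for given, expected in examples)),
        ("OUTPUT FORMAT", output_schema),
    ]
    return "\n\n".join(f"## {title}\n{body}" for title, body in sections if body)


prompt = build_prompt(
    task="Summarize the economic trend of the city since the mayor took office.",
    tools=["web_search"],
    # Declarative phrasing: state what a good answer is, not a list of commands.
    instructions=[
        "The answer covers only the period of the mayor's tenure.",
        "Every claim is backed by a cited source.",
    ],
    examples=[],
    output_schema='{"trend": "growth | decline | flat", "evidence": "string"}',
)
print(prompt)
```

Keeping the sections as data until the last step makes it easy to drop an empty section, reorder sections, or swap the delimiter style without touching the content.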

We borrow a lot of the principles from DSPy on how to approach automatic prompt optimization.

How much time it saves users

The metaprompter has become one of our most-used features, saving customers up to 50% of the time they spend building analyses within the platform.

The Future

We are building an agentic planning team to focus on multi-step planning.

Most notably, we plan to introduce more of a concept of planning, where the agent can reliably perform not just one step but a series of steps that the user can see and intervene in at the appropriate moments.

We also plan to take on some fun technical challenges, such as incorporating DSPy, building an automated LLM-as-a-judge eval, dynamically determining intent complexity for model choice, and adding guiding examples for the prompts.

If you’re interested in solving this kind of AI problem, please reach out!
